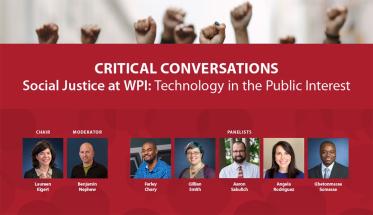

WPI kicked off the new academic year’s Critical Conversations series Oct. 7 with a virtual panel discussion, “Social Justice at WPI: Technology in the Public Interest,” in which selected faculty shared their thoughts and answered audience questions relating to how technology can sometimes be at odds with, and how it could better serve, social justice.

During her introduction, panel chair Laureen Elgert, associate professor, Social Science & Policy Studies, said that based on the Critical Conversations discussions over the past two years, social justice is a “clear priority” at WPI. Elgert organized the event with Yunus Telliel, assistant professor, Anthropology and Rhetoric.

“We’ve also heard that many have an interest in social justice and STEM. Today we bring these two areas together and examine them through a critical lens,” Elgert said. “Technology often serves some and leaves others behind.

Technology not always neutral

“Social justice shows how technology is not necessarily neutral nor fair. We’ve gained insight into the darker side of technology. Sometimes it magnifies and compounds social injustice.” She cited a recent CNN report that 8.6 million children in the U.S. lack the resources for online learning, as one example.

The panel consisted of Farley Chery, assistant teaching professor, IMGD; Gillian Smith, associate professor, Computer Science and IMGD; Aaron Sakulich, associate professor, Civil and Environmental Engineering; Angela Rodriguez, assistant professor, Social Science & Policy Studies; and Gbetonmasse Somasse, assistant teaching professor, Social Science & Policy Studies.

The discussion was moderated by Ben Nephew, assistant research professor, Biology & Biotechnology.

A recurring theme was the need to more fully incorporate social justice into the WPI curriculum, particularly in those disciplines that lead to technological innovation, to ensure that technology of the future is ethical and serves society equitably. The university, and higher education as a whole, must be intentional, deliberate, and thoughtful about it in course design and execution.

Incorporating social justice into the classroom

Somasse said he tries to incorporate his interest in social justice into his classroom teaching. In addition to the issue of equality around the world and in the U.S., he tries to address the rapid development of technology and engage students in a discussion about regulation and how technology is used.

“Policy makers are responsible for writing laws, but don’t necessarily understand the technology. Technology specialists could be part of evaluating public policies. How do you impact public policy if you don’t understand the technology? We can help students understand with policy studies for engineering students. It can broaden their knowledge and skills.”

Technology won’t solve problems without the guiding hand of people who understand the needs and values of those it’s designed to serve. “I come at it from the perspective of a health psychologist,” said Rodriguez. “It’s not about how cool our technology is. We have to come at it from human interest.”

Smith cited automated algorithms that show bias, like some policing programs that result in the disproportionate arrests of African American men, and said the solution is to ban them. “Mistakes have been made in algorithms in policing,” she said. “Yet there’s no federal commission” looking at this.

Enabling others through technology

Chery made the case that technology can help people realize their potential by representing them in ways they may not have considered, especially people of color. He recalled a student with an idea for an animation who, when looking online, was unable to find characters that looked like his family.

“When it was a problem for someone else, it clicked with me,” he said. Chery’s 4-year-old daughter likes the TV show “Doc McStuffins,” about a 5-year-old black girl who is a doctor to her toys. “At end of the program they have actual female doctors talk. Ever since she was three, she’s called herself a doctor. That’s how she sees herself. My position is to enable others. I have to create as many characters of color as possible.”

In response to a question about how he would tie classroom discourse to public discourse, Chery said his project, putting all his characters out there, adds diversity to storytelling so people can see themselves in a different context.

Sakulich said that as an engineer he only became involved in social justice after coming to WPI and working on IQPs. “WPI is better at this than other places,” he said. “I learned there is a social aspect to engineering.

“People solve problems. If we do not develop another piece of technology, the world could be a paradise. An IQP team working with stakeholders takes time, but results in more universally acceptable solutions.”

Unintentional discrimination

Technology can inadvertently discriminate when it works for one ethnic group but not for others, such as skin cancer tests that work well for light-skinned patients but fail to detect warning signs in dark-skinned people.

Rodriguez said such discrimination has direct health impacts. “This is a true public health threat. We need to understand foundational biology,” she said. “There is physiological damage from discrimination. … Public interest technology is an important step in achieving equality.

“We need to turn inward to see our biases. We need to look at every avenue to address where we find one.”

—Martin Luttrell