2024 Fall RBE Master's Capstone Presentation

5:30 p.m. to 9:00 p.m.

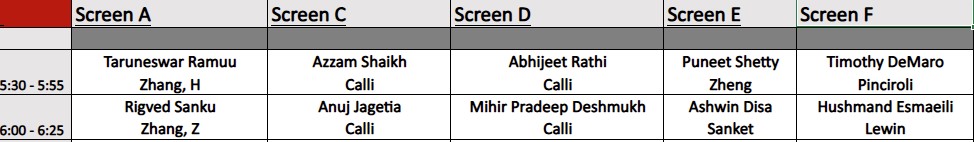

Screen A

Presenter: Taruneswar Ramuu - H. Zhang (5:30 - 5:55)

Title: Ultrasonomyography - ML-based Applications using WULPUS probe

Abstract: The WULPUS probe, a novel ultra-low power ultrasound device, acquires raw ultrasound data for customizable processing, enabling innovative applications in ultrasonomyography. This project leverages the WULPUS probe to classify finger gestures by processing ultrasound signals through a Convolutional Neural Network (CNN) pipeline. The CNN architecture is tailored to effectively handle raw ultrasound data, facilitating accurate recognition of gestures. Initial results demonstrate successful classification of five distinct finger gestures, with scalability to twelve gestures as a future goal. This work establishes a foundational framework for employing ultrasonomyography using the WULPUS probe in teleoperation systems and advanced medical devices, paving the way for further advancements in ultrasound-based gesture recognition.

Presenter: Rigved Sanku - Z. Zhang (6:00 - 6:25)

Title: Extending and Analyzing XMFNet for Supervised Point Cloud Completion

Abstract: Point cloud shape completion addresses the challenge of reconstructing complete 3D shapes from partial and sparse point clouds. By leveraging multimodal data, such as auxiliary images and partial 3D point clouds, the objective is to accurately predict missing regions while preserving global structure and local details. This work reproduces and attempts to extend the state-of-the-art XMFNet architecture, exploring the impact of multimodal fusion for enhanced reconstruction quality.

Screen C

Presenter: Azzam Shaikh - Calli (5:30 - 5:55)

Title: Vision-based Load Prediction of Continuum Origami Robots for Manipulation Applications

Abstract: Continuum robots, with their inherent compliance and flexible structure, are well-suited for applications that require interactions with humans and delicate objects. One method of interaction is manipulation, where a robot grasps an object or opens drawers. In these manipulation contexts, the robot will experience external forces through contact with the environment. Due to these forces and the compliant nature of continuum structures, these robots will undergo shape deformations. In this project, we investigate the relationship between visual shape deformations observed for varying loads applied at the end effector in different parts of the continuum robot’s workspace. Specifically, we study the shape deformation of a single module of an origami-inspired, variable-length continuum robot using images only. Experiments are performed to collect deformation data for various end effector loads in different parts of the robot’s workspace. Key findings reveal a strong relationship between applied weight and resulting deformation. This relationship, defined as a deformation constant, depends on the configuration of the robot and varies throughout the workspace. Through systematic testing, a model was developed that predicts the deformation constant for any configuration. From this model and any deformation that is applied, the load can be predicted, and vice versa. These results pave the way for controlling continuum robots for tasks which require load handling. The work contributes to the field of robotics by offering a systematic approach to developing a model for predicting end effector loads on a continuum robot.
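The load and deformation relationship described in this abstract can be illustrated with a minimal sketch. It assumes, purely for illustration, that deformation is proportional to load at a fixed configuration; the abstract does not state the functional form. The function names and the sample data are hypothetical and are not taken from the project.

```python
def fit_deformation_constant(loads, deformations):
    """Least-squares slope through the origin: deformation ~= k * load.

    Assumption (not from the abstract): the relationship is linear at a
    fixed robot configuration, so a single constant k describes it.
    """
    num = sum(w * d for w, d in zip(loads, deformations))
    den = sum(w * w for w in loads)
    if den == 0:
        raise ValueError("need at least one nonzero load")
    return num / den


def predict_deformation(k, load):
    """Forward direction: expected deformation for a given load."""
    return k * load


def predict_load(k, deformation):
    """Inverse direction: estimated load for an observed deformation."""
    return deformation / k


# Made-up measurements for one configuration (grams, millimeters).
loads_g = [0.0, 50.0, 100.0, 150.0]
deform_mm = [0.0, 1.0, 2.0, 3.0]

k = fit_deformation_constant(loads_g, deform_mm)
estimated_load = predict_load(k, 2.5)
```

In the project, the constant is described as varying with configuration, so a full model would predict `k` from the robot's configuration before applying either direction.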

Presenter: Anuj Jagetia - Calli (6:00 - 6:25)

Title: Dexterous In-Hand Manipulation

Abstract: This research focuses on developing a novel vibration-enabled finger design to explore its impact on dexterous hand manipulation. The project includes creating a mechanical model of a robotic finger capable of generating controllable vibrations and investigating how these vibrations influence the interaction between the finger and objects on a surface without applying external forces. The study aims to understand the effects of vibration on object stability, movement, and orientation through experimental analysis.

Screen D

Presenter: Abhijeet Rathi - Calli (5:30 - 5:55)

Title: Object Rearrangement for Robotic Waste Sorting

Abstract: Efficient waste management requires innovative solutions, particularly in automating the waste sorting process. This project addresses this critical challenge by exploring robotic object rearrangement on conveyor belts, a vital step in automated sorting systems. The primary objective is to enhance the identification and segregation of waste items, enabling more efficient sorting. To this end, we propose and evaluate five distinct methodologies: K-Means Clustering, Principal Component Analysis (PCA), and Z Clustering, as well as two decision-based approaches, Decision Tree Points and Decision Tree Volumetric. These techniques are utilized to determine optimal start and end points for robotic arm trajectories to rearrange objects on the conveyor belt. Additionally, we explore how these methods can be adapted to focus on specific object colors and introduce modifications to incorporate height variations in the sweeping trajectories. This work aims to contribute to the development of robust and adaptive automated waste sorting systems.
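One plausible way a PCA-based method could yield start and end points for a sweep is sketched below. This is an assumption for illustration only: the abstract does not say how the endpoints are derived. The sketch takes 2D object positions on the belt, finds their principal axis, and returns the extreme projections along it. The function name and the sample points are hypothetical.

```python
import math


def sweep_endpoints(points):
    """Return (start, end) of a sweep along the principal axis of 2D points."""
    n = len(points)
    if n < 2:
        raise ValueError("need at least two points")

    # Centroid of the point set.
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n

    # Entries of the 2x2 covariance matrix.
    sxx = sum((x - cx) ** 2 for x, _ in points) / n
    syy = sum((y - cy) ** 2 for _, y in points) / n
    sxy = sum((x - cx) * (y - cy) for x, y in points) / n

    # Closed-form orientation of the major eigenvector of a 2x2 covariance.
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    ux, uy = math.cos(theta), math.sin(theta)

    # Project every point onto the principal axis and keep the extremes.
    proj = [(x - cx) * ux + (y - cy) * uy for x, y in points]
    t_min, t_max = min(proj), max(proj)
    start = (cx + t_min * ux, cy + t_min * uy)
    end = (cx + t_max * ux, cy + t_max * uy)
    return start, end


# Made-up object positions lying along a diagonal of the belt.
positions = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0), (3.0, 3.0)]
start, end = sweep_endpoints(positions)
```

A clustering step such as K-Means could be run first so that each cluster gets its own sweep; that combination is likewise a guess rather than the project's stated pipeline.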

Presenter: Mihir Pradeep Deshmukh - Calli (6:00 - 6:25)

Title: Skill Detection and Sequencing for Context-Aware Manipulation in Multi-Object Environments

Abstract: This project addresses the challenge of robotic manipulation in complex scenarios involving objects that are small, flat, or obstructed by other items or environmental constraints. The proposed framework integrates two distinct models: a skill detection model and a context-aware skill sequencing model. The skill detection model, based on a dual-backbone Mask R-CNN, leverages RGB-D input to achieve over 90% accuracy in identifying four human-inspired dexterous manipulation skills. This model has been validated through testing on a real robot, demonstrating its effectiveness in real-world environments. The skill sequencing model, implemented using a Graph Neural Network (GNN), considers object relationships, environmental obstructions, and task-specific requirements to determine the optimal order of skill execution. By employing a compliant, under-actuated robotic hand, this framework ensures adaptability to diverse object shapes and sizes. Together, these models enhance the reliability and efficiency of robotic manipulation systems in dynamic, multi-object scenarios.

Screen E

Presenter: Puneet Shetty - Zheng (5:30 - 5:55)

Title: Automated Guidewire Navigation System

Abstract: The Automated Guidewire Navigation System represents a significant advancement in minimally invasive medical procedures, addressing the need for precise and efficient guidewire manipulation through complex vascular networks. This system leverages a semi-supervised, physics-informed neural network (PINN) trained on the bending moments and dynamics of the guidewire to achieve real-time remote control and navigation. By integrating servo motor-based actuation for translation and rotation, alongside image-based feedback mechanisms, the system ensures high accuracy in trajectory prediction and movement execution. The guidewire navigation process is enhanced by data-driven insights, combining real-time image segmentation to extract the vessel and guidewire skeletons and spline-based interpolation for accurate path prediction. This innovation minimizes manual intervention, offering potential applications in remote surgical procedures and telemedicine. Developed with technologies including Python, PyTorch, OpenCV, and advanced hardware components like servo motors and custom 3D-printed assemblies, the system embodies a multidisciplinary approach. Preliminary results demonstrate its capability to navigate complex paths with precision, setting the stage for further refinement and clinical integration.

Presenter: Ashwin Disa - Sanket (6:00 - 6:25)

Title: EchoNav: Depth estimation for tiny aerial robot using ultrasound

Abstract: Accurate depth estimation is essential for autonomous navigation in constrained and challenging environments, particularly for tiny aerial robots with limited onboard sensing and computational resources. Vision-based depth estimation often fails in adverse conditions such as glass, smoke, or low-light scenarios. To overcome these limitations, this work investigates real-time depth map prediction using ultrasound sensors, leveraging their robustness in environments where vision sensors are unreliable. A custom 3D-printed data collection rig, equipped with 8 ICU30201 ultrasound sensors and 5 depth cameras, was developed to collect training data. A ResNet-based convolutional neural network (CNN) is utilized to map ultrasound amplitude waveforms to depth maps, with the latter represented as uint16 images providing metric depth in millimeters, sourced from stereo cameras. The system achieves real-time inference, enabling obstacle avoidance based solely on ultrasound data. Preliminary results validate the potential of ultrasound-driven depth estimation, offering a lightweight and robust solution for aerial robotic perception in complex environments.
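The depth map representation mentioned in this abstract (uint16 values giving metric depth in millimeters) can be illustrated with a short sketch that converts such an image to meters. Treating a value of 0 as "no valid depth" is an assumption made here for illustration; the abstract does not say how missing depth is encoded. The function name and the sample image are hypothetical.

```python
def depth_mm_to_m(depth_mm, invalid=0):
    """Convert a uint16 depth image in millimeters to meters.

    depth_mm is a list of rows of integers in [0, 65535].
    Pixels equal to `invalid` are returned as None (assumed encoding
    for missing depth, not confirmed by the abstract).
    """
    out = []
    for row in depth_mm:
        out_row = []
        for v in row:
            if not 0 <= v <= 65535:
                raise ValueError(f"value {v} does not fit in uint16")
            out_row.append(None if v == invalid else v / 1000.0)
        out.append(out_row)
    return out


# Made-up 2x2 depth image in millimeters.
sample = [[0, 1500],
          [65535, 250]]
depth_m = depth_mm_to_m(sample)
```

With this encoding the largest representable depth is 65.535 m at 1 mm resolution.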

Screen F

Presenter: Timothy DeMaro - Pinciroli (5:30 - 5:55)

Title: Error Diagnosis in Kilobot Communication Swarms

Abstract: Swarm algorithms are often touted for requiring low computational power or minimal data manipulation; however, diagnosing faulty robots operating with these naive algorithms can be very expensive, especially if the algorithm is prone to error cascades. We propose an error diagnosis algorithm for a malfunctioning Kilobot swarm suffering from constant, random hardware errors that affect communication in a cascading manner. We discuss the relative lack of information available as grounds for a solution and why, despite the limited data, diagnosing the swarm is computationally very costly.

Presenter: Hushmand Esmaeili - Lewin (6:00 - 6:25)

Title: Development of Application for Control Algorithm Prototyping and Automated Testing with Hardware Integration

Abstract: In this project, we develop and improve a control system prototyping tool used for developing algorithms across a variety of home appliances and industrial applications. The tool facilitates communication with hardware components, including sensors, actuators, and embedded systems. However, its current tightly coupled architecture has limitations such as lack of modularity, complex user-defined scripting, and lack of scalability, which prevent efficient use by engineers, technicians, and developers. Our goal is to redesign the tool to be modular, scalable, and user-friendly, while ensuring easy integration with different hardware configurations. By improving its architecture, enhancing hardware interfacing capabilities, simplifying user interaction and reducing the learning curve, and developing better documentation and logging systems, we aim to create a standardized and reliable platform for hardware testing and efficient algorithm prototyping across teams.