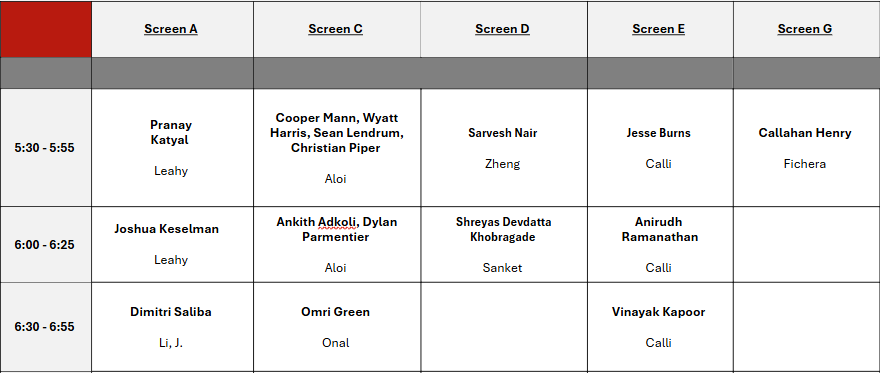

Fall 2025 RBE Master's Capstone Presentation Showcase

5:30 p.m. to 7:00 p.m.

Screen A

Presenter: Pranay Katyal (5:30 - 5:55) ~ Leahy

Title: Asynchronous protocol based Multi agent Formation and Tracking with CBFs

Abstract: This project tests multi-agent systems that use decentralized GCBFs to maintain safety while pursuing formation and target-tracking objectives, with the agents communicating over an asynchronous protocol.

Presenter: Joshua Keselman (6:00 - 6:25) ~ Leahy

Title: Adaptation of 'Temporal Logic Motion Planning in Unknown Environments' to a Real-World Robotic Platform

Abstract: Temporal logic specifications and model checking are powerful tools used in modern approaches for motion planning for robotic task completion. Using these specifications, complex high-level tasks can be formally defined and utilized to determine optimal paths. However, this approach traditionally assumes full knowledge of the workspace, allowing for offline path planning with only small adjustments required online to account for low-level task completion. In some real-world applications, such as search-and-rescue, robots must explore their environment simultaneously with completing user-specified tasks. In this project, I will adapt an existing theoretical approach to this problem to a real-world robotic platform, as well as seek to expand this approach with robustness against dynamic environments or flawed mapping.

Presenter: Dimitri Saliba (6:30 - 6:55) ~ J. Li

Title: Human Robot Interaction Nursing Simulator: Virtual Robot and Validation

Abstract: This project aims to create a simulation platform tailored towards testing and benchmarking robots on nursing- and HRI-related tasks. The simulation will allow for rapid prototyping, as well as quick reconfiguration of task environments. The simulation will be built in Unity, with tight ROS integration allowing for quick deployment from simulation to embodied robots. Additionally, the simulation will support mixed reality control interfaces. The simulation will be validated through a feature comparison with other currently available simulations, as well as a study designed to evaluate whether the simulation can accurately predict human-factors-related metrics on the embodied robot.

Screen C

Presenter: Cooper Mann, Wyatt Harris, Sean Lendrum, and Christian Piper (5:30 - 5:55) ~ Aloi

Title: Physical Implementation and Engine for Chess Emulation (PIECE)

Abstract: Our project aims to develop an intelligent, physical chess board that bridges the gap between online and in-person play. The system integrates hardware and software components to detect player piece motion and respond by actuating magnetized computer player pieces to the desired positions. By combining a physical board with real-time analysis and adaptive, automatic computer movements, this project provides players with the benefits of both in-person and online gameplay.

Presenter: Ankith Adkoli and Dylan Parmentier (6:00 - 6:25) ~ Aloi

Title: Search and Rescue Implementation in Gazebo

Abstract: Millions of people across the world are impacted by natural disasters every year, costing billions of dollars in infrastructure damage. Rescue robotics has the potential to be an extremely impactful field in recovery efforts. This project aims to demonstrate the capabilities of research robotics by utilizing the simulation powers of Gazebo as well as the ROS2/Python engines. A simulated robot will navigate obstacles and terrain to demonstrate that a robot is a suitable and expendable replacement for humans in emergency response teams.

Presenter: Omri Green (6:30 - 6:55) ~ Onal

Title: Deformable Archimedes Drive

Abstract: Development and characterization of a fully 3D-printed Archimedes drive whose drive system can deform to allow for steering and compression.

Screen D

Presenter: Sarvesh Nair (5:30 - 5:55) ~ Zheng

Title: Correlating Operator Control Inputs to Guidewire Tip Trajectory: A Foundation for Predictive Modeling

Abstract: Precise guidewire manipulation through tortuous vascular pathways is critical for the success of minimally invasive endovascular procedures. Predicting guidewire tip motion in response to operator commands remains a significant challenge, yet it is essential for improving navigational safety and enabling future automation. This work establishes a foundational framework for predictive modeling by correlating operator control inputs with the resulting guidewire tip trajectory. We introduce a deep learning model that learns the complex, dynamic relationships among operator actions, vessel geometry, and guidewire behavior. The proposed network architecture processes multimodal inputs: it leverages visual feedback to interpret the current guidewire shape and local vessel structure, while a separate module processes the operator's synchronized control inputs. This model is trained to predict the future trajectory (velocity) of the guidewire tip. By learning the underlying physics of the guidewire-vessel interaction, the network can anticipate critical navigational events, such as successfully advancing into a desired branch, buckling, or performing an unintended U-turn. The resulting model serves as a valuable component for developing future decision-support systems and improving robotic navigation within complex vasculatures.

Presenter: Shreyas Devdatta Khobragade (6:00 - 6:25) ~ Sanket

Title: Navigation in Zero-Lighting using Structured Lighting and Coded Apertures

Abstract: Autonomous aerial navigation in absolute darkness is crucial for post-disaster search and rescue operations, which often take place during disaster-zone power outages. Yet, due to resource constraints, tiny aerial robots, which are perfectly suited for these operations, are unable to navigate in the darkness to find survivors safely. In this paper, we present an autonomous aerial robot for navigation in the dark that combines an infrared (IR) monocular camera with a large-aperture coded lens and structured light, without external infrastructure such as GPS or motion capture. Our approach obtains depth-dependent defocus cues (each structured light point appears as a pattern that is depth dependent), which act as a strong prior for our AsterNet deep depth estimation model. The model is trained in simulation on data generated with a simple optical model and transfers directly to the real world without any fine-tuning or retraining. AsterNet runs onboard the robot at 20 Hz on an NVIDIA Jetson Orin™ Nano. Furthermore, our network is robust to changes in the structured light pattern and in the relative placement of the pattern emitter and IR camera, leading to simplified and cost-effective construction. We evaluate and demonstrate our proposed depth navigation approach, AsterNav, using depth from AsterNet in many real-world experiments with only onboard sensing and computation, including dark matte obstacles and thin ropes (6.25 mm), achieving an overall success rate of 95.5% with unknown object shapes, locations, and materials. To the best of our knowledge, this is the first work on monocular, structured-light-based quadrotor navigation in absolute darkness.

Screen E

Presenter: Jesse Burns (5:30 - 5:55) ~ Calli

Title: Dexterous Picking

Abstract: While picking challenging objects, humans apply various dexterous manipulation skills to their advantage. For example, if the object is too thin, one can slide it to the edge of the table surface or flip it using two fingers. Similarly, if the object is close to a vertical surface, pushing the object towards that surface makes the picking operation easier at times. We are implementing such skills for robots. Looking at a (cluttered) manipulation scene, the robot should decide which skill is more appropriate and execute it successfully.

Presenter: Anirudh Ramanathan (6:00 - 6:25) ~ Calli

Title: Multi-Robot Centralization and Coordination for Automatic Waste Sorting

Abstract: Recycling sorting in the US still relies heavily on manual labor to separate materials, which increases operating costs and limits the throughput of recycling plants. There is a continued need to shift toward larger-scale processing and to increase the use of automation in recycling operations. The throughput of waste sorting can be significantly improved with an assembly-line style setup that uses multiple robotic systems working together to sort incoming materials. Multi-robot systems must coordinate their actions and operate in sync to make steady, effective progress on a shared task. Part of this semester's objective is to upgrade the current Automatic Waste Sorting setup in the lab, which is currently built around Arduino microcontrollers, into a more unified and collaborative robotic system using PLCs and Structured Text logic. The redesigned system will include operation and safety guarantees that comply with industry standards and provide a stronger foundation for collaborative robotic operation. Another objective of the project is to explore, design and compare effective scheduling algorithms that enable the multi-robot system to perform sorting tasks efficiently and to optimize the overall throughput of the system.

Presenter: Vinayak Kapoor (6:30 - 6:55) ~ Calli

Title: Ensemble Learning for Vision-Based Antipodal Grasping

Abstract: Vision-based antipodal grasping suffers from a critical generalization gap, where models with high dataset-level accuracy often fail in real-world, out-of-distribution (OOD) conditions. This work addresses this sim2real gap by introducing an ensemble learning framework that unifies varied grasp generators to mitigate individual biases and enhance robustness. A key component of this methodology is the integration of the Generalized Intersection over Union (gIOU) as both a training objective and a primary evaluation metric. Extensive real-world benchmarking experiments, synthetic OOD evaluations, and test-set analyses demonstrate two key findings: (1) the ensemble consistently outperforms its constituent models, and (2) the gIOU metric proves superior to the commonly used IOU for predicting grasp success. Our results establish ensemble learning and the use of gIOU as effective, combinable strategies for developing robust grasping systems.
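
For readers unfamiliar with the metric named in this abstract, the following is a minimal sketch of how IOU and gIOU differ. It assumes axis-aligned boxes given as `(x_min, y_min, x_max, y_max)`, which is a simplification: grasp rectangles are often oriented, and the presenter's actual implementation is not described in the abstract. The names `Box`, `area`, and `iou_and_giou` are illustrative only.

```python
from typing import Tuple

# Assumed representation: axis-aligned box (x_min, y_min, x_max, y_max).
Box = Tuple[float, float, float, float]


def area(box: Box) -> float:
    """Area of a box; degenerate or inverted boxes count as zero."""
    x0, y0, x1, y1 = box
    return max(0.0, x1 - x0) * max(0.0, y1 - y0)


def iou_and_giou(a: Box, b: Box) -> Tuple[float, float]:
    """Return (IoU, GIoU) for two axis-aligned boxes."""
    # Overlap region; its area is zero when the boxes are disjoint.
    inter = area((max(a[0], b[0]), max(a[1], b[1]),
                  min(a[2], b[2]), min(a[3], b[3])))
    union = area(a) + area(b) - inter
    # Smallest axis-aligned box enclosing both inputs.
    hull = area((min(a[0], b[0]), min(a[1], b[1]),
                 max(a[2], b[2]), max(a[3], b[3])))
    if union == 0.0 or hull == 0.0:
        raise ValueError("both boxes have zero area")
    iou = inter / union
    # GIoU subtracts the fraction of the enclosing box not covered by the union.
    giou = iou - (hull - union) / hull
    return iou, giou


if __name__ == "__main__":
    # Disjoint boxes: IoU is 0 regardless of distance, GIoU is negative.
    print(iou_and_giou((0, 0, 1, 1), (2, 0, 3, 1)))
    # Partially overlapping boxes.
    print(iou_and_giou((0, 0, 2, 2), (1, 1, 3, 3)))
```

Unlike IOU, which is zero for every pair of non-overlapping boxes, gIOU keeps decreasing as the boxes move apart, so it still provides a signal when a prediction misses its target entirely.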

Screen G

Presenter: Callahan Henry (5:30 - 5:55) ~ Fichera

Title: Development of a Teleoperated User Interface for Robotic Laser Surgery

Abstract: The goal of this project is to develop a user interface to control a robotic laser surgery system. The interface provides real-time control of the laser aiming, and it employs haptics to ensure the laser point of incidence remains on the desired surgical site. This system is designed in accordance with the leader-follower framework for teleoperation, and it consists of a haptic device, the Inverse3 (Haply, Canada), acting as the "leader", controlling a Panda robotic arm (the "follower") holding the laser, the AcuPulse CO2. The Inverse3 provides bounding virtual fixtures to ensure that the laser is not accidentally fired outside of a specified "safe" region. The system's accuracy and latency were validated through benchtop testing. The overarching goal of this research is to establish a new user interface that facilitates future robotic laser surgery research with intuitive real-time control.