While teachers nationwide are being asked to use test data to inform their classroom instruction and evaluate student performance, critics argue that the high-stakes, standards-based tests required by the federal No Child Left Behind Act are administered so often that they significantly reduce teaching time. But effective instruction and effective testing need not be mutually exclusive. In fact, both can happen at once with computerized tutors that detect and respond to different learning styles.

That’s the conclusion of a paper by researchers at Worcester Polytechnic Institute (WPI) that recently received the James Chen Annual Award for the best paper published in 2009 in User Modeling and User-Adapted Interaction: The Journal of Personalization Research. The paper, by Mingyu Feng, a recent PhD recipient, and Neil Heffernan, PhD, associate professor of computer science, with Kenneth Koedinger, PhD, of the Human Computer Interaction Institute at Carnegie Mellon University, offers compelling evidence that students can receive feedback during exams while their knowledge is simultaneously tested, significantly improving both testing and learning.

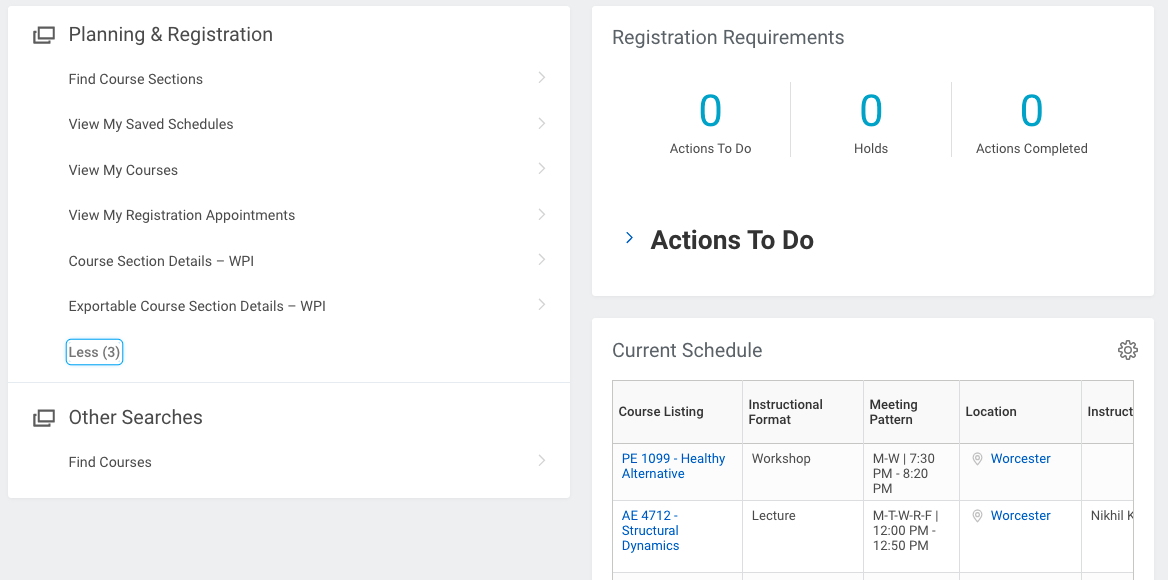

The award-winning paper, "Addressing the Assessment Challenge with an Online System That Tutors as it Assesses," reports on a web-based mathematics tutoring system called ASSISTment, which Heffernan and his research team have developed over the past six years with more than $9 million in funding from the National Science Foundation, the U.S. Department of Education, and other agencies. According to the authors, integrating assistance and assessment lets ASSISTment evaluate students’ learning styles and knowledge more effectively and efficiently. By making testing part of the learning experience, it also reduces the need for excessive testing that cuts into instructional time.

"Scientists who study how to measure student knowledge normally think that the best way to assess students is to not give students any feedback during the test," said Heffernan. "They assume that it is harder to measure a moving target. That is, if students are allowed to learn during the test, then their knowledge level obviously changes even as the test is being conducted.

"However, our research shows that you can better assess student knowledge with ASSISTment because the program makes use of the additional information it gathers as students use it, such as how many hints students need to solve problems, how many attempts they make to solve problems, and how much time they need to answer questions," he added. "The program gathers that information even as it ’tutors’ students, helping them to learn by feeding them information based on what it discovers from their input."

The paper reports on an analysis of results obtained over the past few years in several public schools using the ASSISTment mathematics tutoring program. "Traditional assessment usually focuses on students’ responses to test items and whether they are correctly or incorrectly answered," explained Heffernan. "It ignores all other student behaviors during the test. In our research, we took advantage of a computer-based tutoring system to collect extensive information while students interact with the system."

Each week, as students work on the ASSISTment website, the system "learns" more about each student’s abilities, and thus it can provide increasingly accurate predictions of how the students will do on a standardized test. At the same time, it helps students work through tough problems by breaking them into sub-steps and posing prompting questions that lead the students toward a solution. All the while, ASSISTment collects data on different aspects of student performance, such as accuracy, speed, and help-seeking behavior.
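To make the kinds of data described above concrete, here is a minimal sketch in Python of how per-problem interaction records might be rolled up into the measures the researchers mention (accuracy, speed, and help-seeking). It is an illustration only: the `ProblemLog` record, its field names, and the `summarize` function are hypothetical and are not taken from ASSISTment or the paper, which does not publish its data format here.

```python
from statistics import mean
from typing import NamedTuple


class ProblemLog(NamedTuple):
    """One student's interaction with one problem (hypothetical record)."""
    correct_first_try: bool  # answered correctly without help
    hints_requested: int     # help-seeking: number of hints asked for
    attempts: int            # number of answers submitted
    seconds_spent: float     # time from first view to final answer


def summarize(logs):
    """Average a student's logs into accuracy, help-seeking, and speed measures."""
    if not logs:
        raise ValueError("need at least one problem log")
    return {
        "accuracy": mean(1.0 if log.correct_first_try else 0.0 for log in logs),
        "avg_hints": mean(log.hints_requested for log in logs),
        "avg_attempts": mean(log.attempts for log in logs),
        "avg_seconds": mean(log.seconds_spent for log in logs),
    }


# Two made-up problem sessions for a single student.
example_logs = [
    ProblemLog(correct_first_try=True, hints_requested=0, attempts=1, seconds_spent=30.0),
    ProblemLog(correct_first_try=False, hints_requested=2, attempts=3, seconds_spent=90.0),
]
summary = summarize(example_logs)
```

A traditional test would keep only the accuracy figure. The point the researchers make is that the other measures carry additional information about what a student knows, so a summary along these lines could feed a prediction of standardized test performance.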

"ASSISTment functions as a tutor while simultaneously gathering rich data that illuminate the students’ learning styles and from which various reports can be prepared for individual students, for teachers, and for other stakeholders—including parents—who need to understand how students are performing," said Heffernan, whose dynamic assessment tutoring programs have been used for free since 2004 by a dozen public school systems in Massachusetts, New Hampshire, Maine, and North Carolina.

"Our research shows that schools can turn many of their current tests into learning experiences," Heffernan said. "These tutoring programs enable schools to do less testing in which students don’t get feedback, and yet still conduct valid assessments. They make it possible to do better assessments, and they also address critics’ arguments that testing takes too much time. With ASSISTment, kids learn during the test, so testing time is never wasted and fewer tests are actually required."