A researcher at Worcester Polytechnic Institute (WPI) is using computer science to help fight the growing problem of crowdturfing, a troublesome phenomenon in which masses of online workers are paid to post phony reviews, circulate malicious tweets, and even spread fake news. Funded by a National Science Foundation CAREER Award, assistant professor Kyumin Lee has developed algorithms that have proven highly accurate in detecting fake “likes” and followers on platforms such as Amazon, Facebook, and Twitter.

Crowdturfing (a term that combines crowdsourcing and astroturf, a type of artificial grass) is like an online black market for false information. Its consequences can be dangerous: customers buy products that don’t live up to their artificially inflated reviews, malicious information is pushed out in fake tweets and posts, and elections may even be swayed by concerted disinformation campaigns.

“We don’t know what is real and what is coming from people paid to post phony or malicious information,” said Lee, a pioneer in battling crowdturfing. He said the problem can undermine the credibility of the Internet, leaving people unsure how much they can trust what they see, even on their favorite websites.

“We believe less than we used to believe,” he said. “That’s because the amount of fake information people see has been increasing. They’re manipulating our information, whether it’s a product review or fake news. My goal is to reveal a whole ecosystem of crowdturfing. Who are the workers performing these tasks? What websites are they targeting? What are they falsely promoting?”

Kyumin Lee

Lee, who joined WPI in July, starts with crowdsourcing sites such as Amazon’s Mechanical Turk (MTurk), an online marketplace where anyone can recruit workers to complete tasks for pay. While most tasks on these sites are legitimate, the sites have also been used to recruit people for crowdturfing campaigns. Some crowdsourcing sites try to weed out such illegitimate tasks, but others don’t. And the phony or malicious tasks can be quite popular, because they usually pay better than legitimate ones.

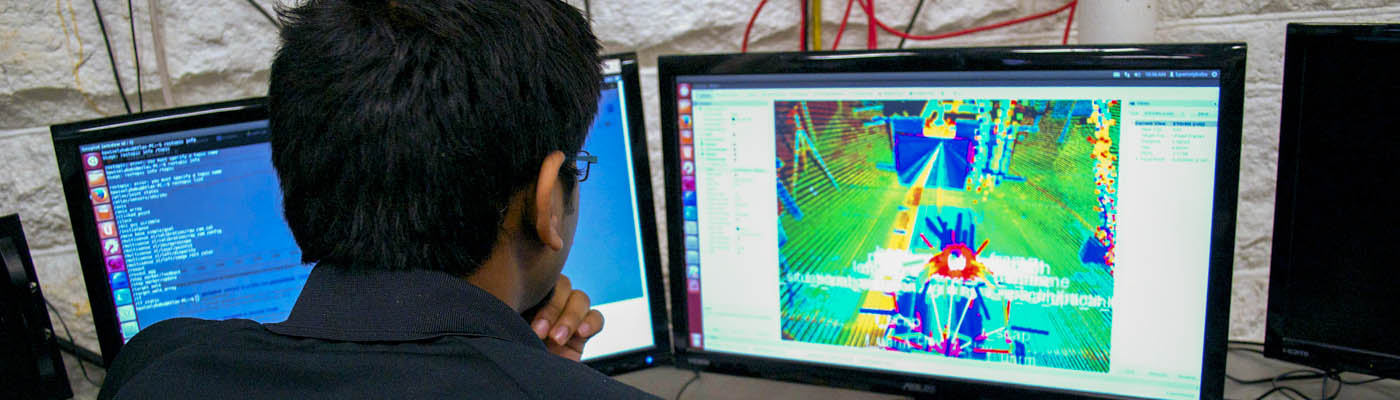

Using machine learning and predictive modeling, Lee builds algorithms that sift through posted tasks for patterns his research has linked to illegitimate work: for example, higher hourly wages, or jobs that involve manipulating or posting information on particular websites or clicking on certain kinds of links. The algorithms can identify the malicious organizations posting the tasks, the websites the crowdturfers are told to target, and even the workers who sign up to complete the tasks.
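The article does not spell out Lee’s features or models, so what follows is only a minimal Python sketch of the general idea: a classifier that combines task-level signals like those described above into a single probability. Logistic regression is used here as a stand-in and is not necessarily the technique used in the research; the `Task` fields, the toy training data, and all hyperparameters are hypothetical.

```python
import math
from dataclasses import dataclass


# Hypothetical task features; the research's actual feature set is not described here.
@dataclass
class Task:
    hourly_wage: float          # advertised pay in USD per hour
    targets_site: bool          # asks workers to post, like, or follow on a named site
    requires_link_click: bool   # asks workers to click a supplied link


def features(task: Task) -> list[float]:
    # Leading 1.0 is the bias term; wage is divided by 10 to keep features on a similar scale.
    return [1.0, task.hourly_wage / 10.0,
            float(task.targets_site), float(task.requires_link_click)]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights: list[float], task: Task) -> float:
    """Estimated probability that a task is a crowdturfing task."""
    return sigmoid(sum(w * x for w, x in zip(weights, features(task))))


def train(tasks: list[Task], labels: list[int],
          lr: float = 0.5, epochs: int = 2000, l2: float = 0.01) -> list[float]:
    """Fit logistic-regression weights by batch gradient descent (L2 penalty on non-bias weights)."""
    weights = [0.0] * 4
    n = len(tasks)
    for _ in range(epochs):
        grad = [0.0] * 4
        for task, y in zip(tasks, labels):
            x = features(task)
            error = predict_proba(weights, task) - y
            for i in range(4):
                grad[i] += error * x[i]
        for i in range(4):
            penalty = l2 * weights[i] if i > 0 else 0.0
            weights[i] -= lr * (grad[i] / n + penalty)
    return weights


# Toy, made-up training data: 1 = crowdturfing task, 0 = legitimate task.
TRAIN_TASKS = [
    Task(4.0, False, False), Task(6.0, False, False),
    Task(5.0, False, True),  Task(7.0, True, False),
    Task(12.0, True, True),  Task(10.0, True, False),
    Task(15.0, True, True),  Task(9.0, True, True),
]
TRAIN_LABELS = [0, 0, 0, 0, 1, 1, 1, 1]

weights = train(TRAIN_TASKS, TRAIN_LABELS)
print(predict_proba(weights, Task(14.0, True, True)))   # high-paying, site-targeting task
print(predict_proba(weights, Task(4.5, False, False)))  # low-paying, generic task
```

A real system would learn from far more labeled tasks and richer signals (for instance, the text of each task description); the sketch only shows how several weak patterns can be weighed together into one score.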

On the targeted websites themselves, the predictive algorithms can gauge the probability that new users are, in fact, there to carry out assigned crowdturfing tasks, such as “liking” content on social media sites or “following” certain social media users. In Lee’s research, the algorithms detected fake likes with 90 percent accuracy and fake followers with 99 percent accuracy.
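The article reports accuracy figures but not how probabilities become decisions. As a hypothetical sketch, a platform might flag any new account whose predicted probability meets a threshold; accuracy is then the fraction of accounts whose flag matches the true label. The 0.5 threshold, the function names, and the scores below are made up for illustration and do not come from the research.

```python
def flag_accounts(scores: list[float], threshold: float = 0.5) -> list[bool]:
    """Flag each account whose predicted crowdturfing probability meets the threshold."""
    return [score >= threshold for score in scores]


def accuracy(predicted: list[bool], actual: list[bool]) -> float:
    """Fraction of accounts whose flag matches the ground-truth label."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty lists of equal length")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


# Made-up model scores for ten new accounts and their (hypothetical) true labels.
scores = [0.92, 0.15, 0.71, 0.40, 0.88, 0.05, 0.60, 0.30, 0.97, 0.45]
is_fake = [True, False, True, True, True, False, False, False, True, False]

flags = flag_accounts(scores)
print(accuracy(flags, is_fake))  # 0.8: accounts 4 and 7 (counting from 1) are misclassified
```

Raising or lowering the threshold trades missed fake accounts against wrongly flagged real ones, so a platform would tune it to its own tolerance for each kind of error.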

While he hasn’t specifically researched fake news, Lee said bots are not the only things pushing out propaganda and misleading stories online. People can easily be hired on crowdsourcing sites to spread fake news articles, extending their reach and amplifying the harm they do.

“The algorithm will potentially prevent future crowdturfing because you can predict what users will be doing,” he added. “Hopefully, companies can apply these algorithms to filter malicious users and malicious content out of their systems in real time. It will make their sites more credible. It’s all about what information we can trust and improving the trustworthiness of cyberspace.”

Lee’s work is funded by his five-year, $516,000 NSF CAREER Award, which he received in 2016 while at Utah State University. In addition, in 2013 he was one of only 150 professors in the United States to receive a Google Faculty Research Award; that $43,295 award also supports his crowdturfing research.

Before turning his attention to crowdturfing, Lee conducted research on spam detection. One of his next goals is to adapt his algorithms to detect both spam and crowdturfing. He said crowdturfing is harder to detect because, for example, a review of a new product can look legitimate even if it has been bought and paid for. “The ideal solution is one method that can detect all of these problems at once,” he said. “We can build a universal tool that can detect all kinds of malicious users. That’s my future work.”

Lee added that he expects to make his algorithms openly available to companies and organizations, which could tailor them to their specific needs. “I expect to share the data set so people can come up with a better algorithm, adapted for their specific organization,” he said. “When they read our papers, they can understand how this works and implement their own system.”

Lee has been assisted in his research by WPI computer science graduate students Thanh Tran and Nguyen Vo.