Startlingly competent generative artificial intelligence may have burst into the public consciousness in the past year or so, but the technology’s underpinnings have been in place for decades, and AI has become an integral part of our lives—in our homes and cars, on our phones, and in our doctors’ offices.

The intersection of healthcare and AI served as the backdrop for the Critical Conversation series at WPI’s fall Arts & Sciences Week. While the discussion at the Rubin Campus Center was driven by practical applications in hospital settings and ethical implications for patient safety and equitable care, the wider conversation about AI will continue at WPI, said President Grace Wang. WPI will explore new research questions, degree programs, academic technologies, and other opportunities related to AI, she said.

“Taking no action is not going to be an option for us as a STEM institution,” Wang said. “In fact, our expertise in the field makes us a natural fit to lead on educating the next generation of AI experts.”

Jean King, WPI Peterson Family Dean in the School of Arts and Sciences, served as emcee for the event, which she said was intended to foster open dialogue and discussion about pressing issues of our time. The panel included the following:

Elke Rundensteiner, William B. Smith Professor of Computer Science

Emmanuel Agu, Harold L. Jurist ’61 and Heather E. Jurist Dean’s Professor

Carolina Ruiz, Harold L. Jurist ’61 and Heather E. Jurist Dean’s Professor

Edwin Boudreaux, professor of emergency medicine and co-director of the Center for Accelerating Practices to End Suicide through Technology Translation at UMass Chan Medical School

Dr. David McManus, the Richard M. Haidack Professor of Medicine and chair and professor of medicine at UMass Chan Medical School

Below are thoughts from each panelist:

Rundensteiner, on ensuring people know how AI is being used for decisions that impact their lives:

I’m interested in AI and understandability. When we make a decision using AI, can the person the decision is being made for understand why that decision is being made, and for what reasons? Why is a bank telling someone, for example, that a loan is being denied? The person might not know all the data the bank is reviewing and why. Or, why is a doctor recommending a certain medical procedure? How does this person know what AI system led to that decision?

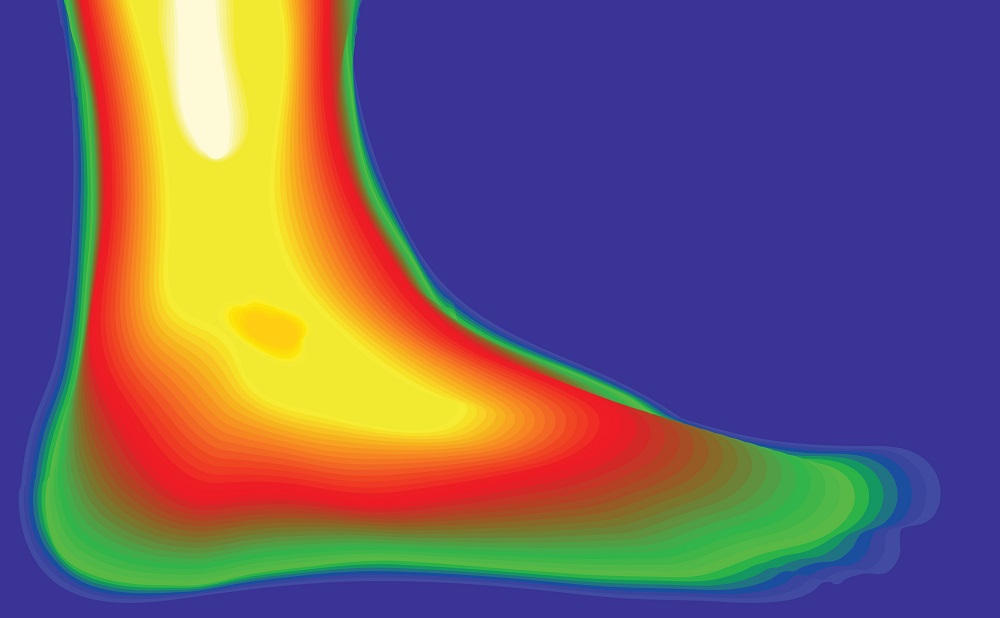

Agu, on how AI will expand access to quality care:

The potential to democratize healthcare through AI is there. There are many people out there who could never meet a cardiac surgeon because they live in a part of the world where they don’t have access to those types of health professionals. But with AI, we’re able to train models that learn from these experts and push that knowledge out to the edges—push it out to people who have never had access. Essentially, a person from a remote part of America, or in a developing country, can access the best expertise in the world because the models we train are based on the labels generated from experts who are the most knowledgeable in the world.

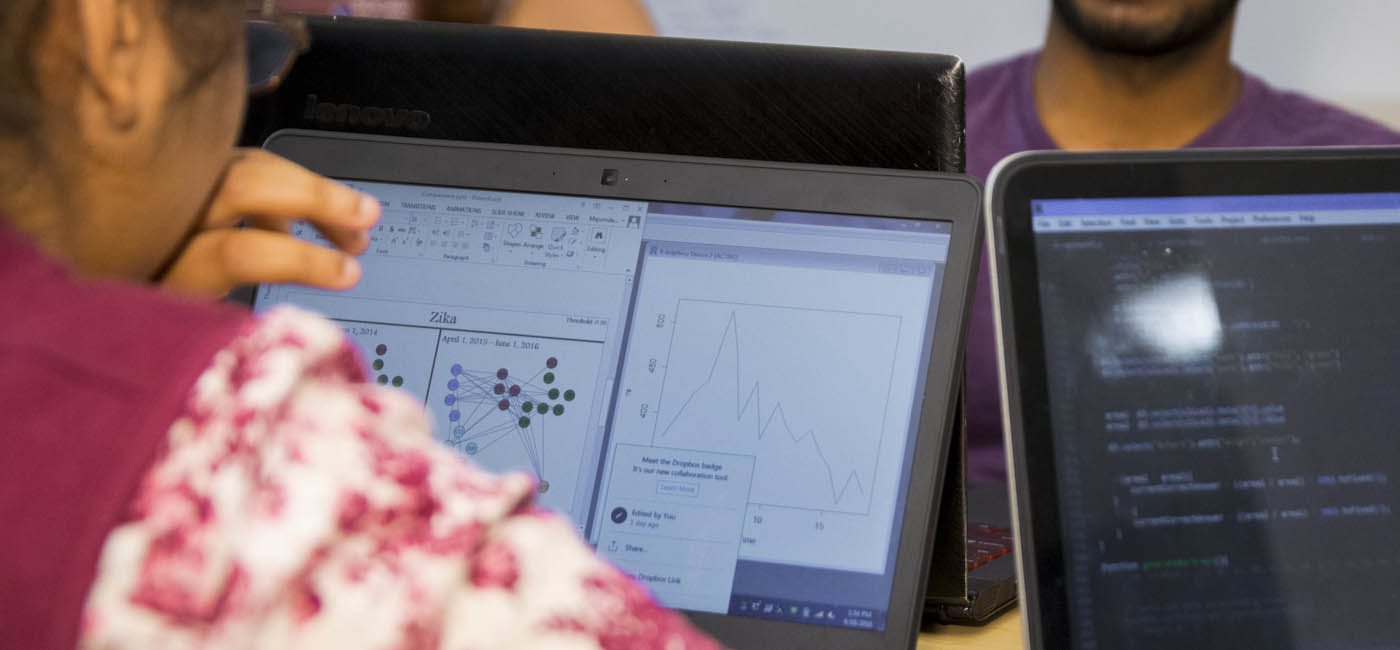

Ruiz, on using AI to help treat sleep disorders:

Sleep medicine is an amazing field to be in, because sleep is a thing we all have a connection with, an experience with. In a traditional sleep study, someone will come and stay overnight, and we collect lots and lots of signals—their brain activity, breathing, heart activity, temperature, and muscle movement. We are producing data and using AI to create deep learning models that are able to predict and help diagnose sleep disorders, which can be important in diagnosing other aspects of people’s health.

Boudreaux, on the role AI can play in suicide prevention:

Think about your own lives. If you’re like other groups of people, the majority of you knew someone who has died by suicide. The tragedy is that among all those people, the majority were seen in a healthcare setting within a year before they died. Thirty-five percent saw a healthcare provider a month before they died, and the vast majority were not identified as at risk. We have opportunities to intervene, but we don’t act because clinicians are bad at identifying risk. Machine learning models are good at identifying risk, because that’s what they’re designed to do: predict who may be most vulnerable and most at risk for suicide. This is what we’re working on in our center: building better models to help us identify when an individual is at risk, monitor that risk over time, and cue clinicians to act at the right time with the right intervention to prevent suicide.

McManus, on AI’s potential to improve healthcare outcomes:

When I’m in clinic, a patient will see me for, say, high blood pressure, or because they’ve had a heart attack. I’ll typically look at their electronic health record: I’ll look at a patient’s last few blood pressures, their history, their medication list. I’m doing homework before I see the patient, which I think is standard practice and best practice. The truth is, though, there are billions of data points within that electronic health record on that individual. And to be frank, in my 15 minutes of homework I may miss a laboratory test that was abnormal, or a record from an outside hospital, if the patient doesn’t tell me about that. It shows where AI would be a wonderful co-pilot for well-meaning physicians to take care of their patients.

A video of the forum is available.